DeiT: Data-Efficient Image Transformer

Training data-efficient image transformers & distillation through attention

Introduction

The goal of this work is not to surpass the current state of the art (SOTA) in image classification, but to make image transformers trainable without massive datasets. The Vision Transformer (ViT) showed that transformers can be applied directly to images. It also found that they do not generalize well when trained on insufficient data, which is why its best results relied on pretraining on very large datasets such as JFT-300M. To address this, DeiT trains on ImageNet alone and relies on two ingredients: strong data augmentation and knowledge distillation from a teacher model.

Methods

Knowledge Distillation

In standard Transformer classification (as in ViT), a learnable class token [CLS] is prepended to the input sequence. For images, that sequence consists of fixed-size patches, each linearly embedded into a token. After the sequence passes through the Transformer layers, the output embedding at the [CLS] position is fed to a linear classifier to predict the label.
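
The input pipeline described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not ViT's or DeiT's implementation. Images are grayscale nested lists, and the projection matrix `proj` and the [CLS] vector are stand-ins for learned parameters. The helper names (`patchify`, `build_input_sequence`, `classify_from_cls`) are hypothetical. Position embeddings and the Transformer layers themselves are omitted.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def patchify(image: Matrix, patch: int) -> List[Vector]:
    """Split an H x W grayscale image into non-overlapping patch x patch
    blocks, scanning left to right, top to bottom, and flatten each block."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by patch")
    return [
        [image[r][c] for r in range(top, top + patch) for c in range(left, left + patch)]
        for top in range(0, h, patch)
        for left in range(0, w, patch)
    ]


def linear(x: Vector, weight: Matrix) -> Vector:
    """Matrix-vector product y = W x, with W given as out_dim rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def build_input_sequence(image: Matrix, patch: int, proj: Matrix, cls_token: Vector) -> List[Vector]:
    """Embed each patch linearly and prepend the [CLS] token at position 0."""
    tokens = [linear(p, proj) for p in patchify(image, patch)]
    return [list(cls_token)] + tokens


def classify_from_cls(encoder_output: List[Vector], head: Matrix) -> int:
    """Read only the output at position 0 ([CLS]) and return the argmax class."""
    logits = linear(encoder_output[0], head)
    return max(range(len(logits)), key=lambda i: logits[i])


# Toy usage: an 8x8 image, 4x4 patches, embedding dimension 4.
random.seed(0)
image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
dim = 4
proj = [[random.gauss(0.0, 0.1) for _ in range(4 * 4)] for _ in range(dim)]
seq = build_input_sequence(image, patch=4, proj=proj, cls_token=[0.0] * dim)
print(len(seq))  # 5 tokens: [CLS] + 4 patches
```

In a real model, `seq` would pass through the Transformer layers before `classify_from_cls` reads position 0. The other positions still influence that output through self-attention.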

DeiT (Data-efficient image Transformers) extends this approach by appending a learnable distillation token [DIS] to the end of the input sequence. Like the [CLS] token, it interacts with the patch tokens through self-attention. Its output embedding is used to predict a hard label, namely the top-1 prediction of a stronger, pretrained teacher classifier. The teacher can be either a convolutional network or a transformer; the paper reports that a convnet teacher gives better results.
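
The token layout and the teacher's hard label can be sketched as follows. This minimal example assumes tokens are plain Python lists and that the teacher's logits are already available. All helper names are hypothetical, and the Transformer layers are skipped. Putting [DIS] last follows the description above. Because every token carries a position embedding, what matters is that the distillation head reads the same slot the token was inserted into.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


def teacher_hard_label(teacher_logits: Sequence[float]) -> int:
    """Hard-label target for the [DIS] head: the teacher's top-1 class."""
    return argmax(teacher_logits)


def add_special_tokens(patch_tokens: List[Vector], cls_token: Vector, dis_token: Vector) -> List[Vector]:
    """[CLS] first, then the patch tokens, then [DIS] appended at the end."""
    return [list(cls_token)] + [list(t) for t in patch_tokens] + [list(dis_token)]


def split_outputs(encoder_output: List[Vector]) -> Tuple[Vector, Vector]:
    """Pick out the final [CLS] embedding (first) and [DIS] embedding (last)."""
    return encoder_output[0], encoder_output[-1]


# Toy usage with three 2-d patch tokens. The Transformer layers are skipped,
# so the "encoder output" here is just the input sequence.
patches = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
seq = add_special_tokens(patches, cls_token=[1.0, 1.0], dis_token=[-1.0, -1.0])
cls_out, dis_out = split_outputs(seq)
y_t = teacher_hard_label([0.2, 2.5, -1.0])
print(len(seq), y_t)  # 5 1
```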

In effect, DeiT is trained on two tasks with two corresponding labels. The [CLS] output is supervised with the ground-truth label from the training set, while the [DIS] output is supervised with the teacher's prediction. This lets the student learn both from the data and from the teacher. At test time, the paper combines the predictions of the two heads.
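
The two-label objective can be written as a hard-label distillation loss: L = 1/2 · CE(ψ(Z_cls), y) + 1/2 · CE(ψ(Z_dis), y_t), where y is the true label and y_t = argmax_c Z_t(c) is the teacher's top-1 class. At inference, the softmax outputs of the two heads are added before taking the argmax. The sketch below is a minimal per-example version in plain Python, assuming raw logits as lists. It covers only this hard-label variant; the paper also describes a soft, KL-divergence-based distillation that is not shown here. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target], computed via log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target]


def hard_distillation_loss(cls_logits: Sequence[float], dis_logits: Sequence[float],
                           y_true: int, teacher_logits: Sequence[float]) -> float:
    """0.5 * CE([CLS] head, true label) + 0.5 * CE([DIS] head, teacher's top-1 label)."""
    y_teacher = max(range(len(teacher_logits)), key=lambda i: teacher_logits[i])
    return 0.5 * cross_entropy(cls_logits, y_true) + 0.5 * cross_entropy(dis_logits, y_teacher)


def predict(cls_logits: Sequence[float], dis_logits: Sequence[float]) -> int:
    """Inference: add the two heads' softmax outputs and take the argmax."""
    fused = [a + b for a, b in zip(softmax(cls_logits), softmax(dis_logits))]
    return max(range(len(fused)), key=lambda i: fused[i])


# Toy usage with 3 classes: ground truth is class 0, the teacher prefers class 1.
loss = hard_distillation_loss(
    cls_logits=[2.0, 0.5, -1.0],
    dis_logits=[0.2, 1.8, -0.5],
    y_true=0,
    teacher_logits=[0.1, 3.0, 0.0],
)
print(round(loss, 4))
```

The two heads may legitimately disagree when the teacher's prediction differs from the ground truth. That disagreement is the extra signal the distillation token carries.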

Data Augmentation

Training a vision transformer on limited data typically yields suboptimal performance. The authors therefore explore many data augmentation and regularization techniques to find the most effective combination. Without them, DeiT's results would be roughly on par with those of the existing Vision Transformer (ViT), with no significant improvement.

The training recipe uses data augmentation techniques such as RandAugment, random erasing, and repeated augmentation, together with regularization techniques including Mixup, CutMix, and stochastic depth. In combination, these methods improve the generalization of the transformer when training data is limited.
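
Two of the listed techniques, Mixup and CutMix, mix both images and labels, which is easy to show in a small example. The sketch below is a generic version of each technique in plain Python, not the code used by DeiT. It assumes grayscale images as nested lists and one-hot label vectors. The `alpha` defaults are illustrative assumptions, not necessarily the paper's settings. Real pipelines apply these operations to batched tensors.

```python
import math
import random
from typing import List, Tuple

Image = List[List[float]]   # grayscale H x W image
Label = List[float]         # one-hot or already-mixed label vector


def mix_labels(y1: Label, y2: Label, lam: float) -> Label:
    return [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]


def mixup(x1: Image, y1: Label, x2: Image, y2: Label,
          alpha: float = 0.8, rng=random) -> Tuple[Image, Label]:
    """Blend two whole images and their labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    x = [[lam * a + (1 - lam) * b for a, b in zip(r1, r2)] for r1, r2 in zip(x1, x2)]
    return x, mix_labels(y1, y2, lam)


def cutmix(x1: Image, y1: Label, x2: Image, y2: Label,
           alpha: float = 1.0, rng=random) -> Tuple[Image, Label]:
    """Paste a random box from x2 into x1; mix labels by the pasted area."""
    h, w = len(x1), len(x1[0])
    lam = rng.betavariate(alpha, alpha)
    # Box side lengths chosen so that the box covers about (1 - lam) of the image.
    cut_h, cut_w = int(h * math.sqrt(1 - lam)), int(w * math.sqrt(1 - lam))
    cy, cx = rng.randrange(h), rng.randrange(w)
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    x = [list(row) for row in x1]
    for r in range(top, bottom):
        for c in range(left, right):
            x[r][c] = x2[r][c]
    # Recompute lam from the actual (possibly clipped) box area.
    lam = 1 - (bottom - top) * (right - left) / (h * w)
    return x, mix_labels(y1, y2, lam)


# Toy usage: an all-zero image of class 0 and an all-one image of class 1.
rng = random.Random(0)
zeros = [[0.0] * 8 for _ in range(8)]
ones = [[1.0] * 8 for _ in range(8)]
x_mix, y_mix = mixup(zeros, [1.0, 0.0], ones, [0.0, 1.0], rng=rng)
x_cut, y_cut = cutmix(zeros, [1.0, 0.0], ones, [0.0, 1.0], rng=rng)
print(y_mix, y_cut)
```

With these toy inputs, the second entry of `y_cut` equals the fraction of pixels copied from the second image. This is the property that makes CutMix labels consistent with CutMix images.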

Results

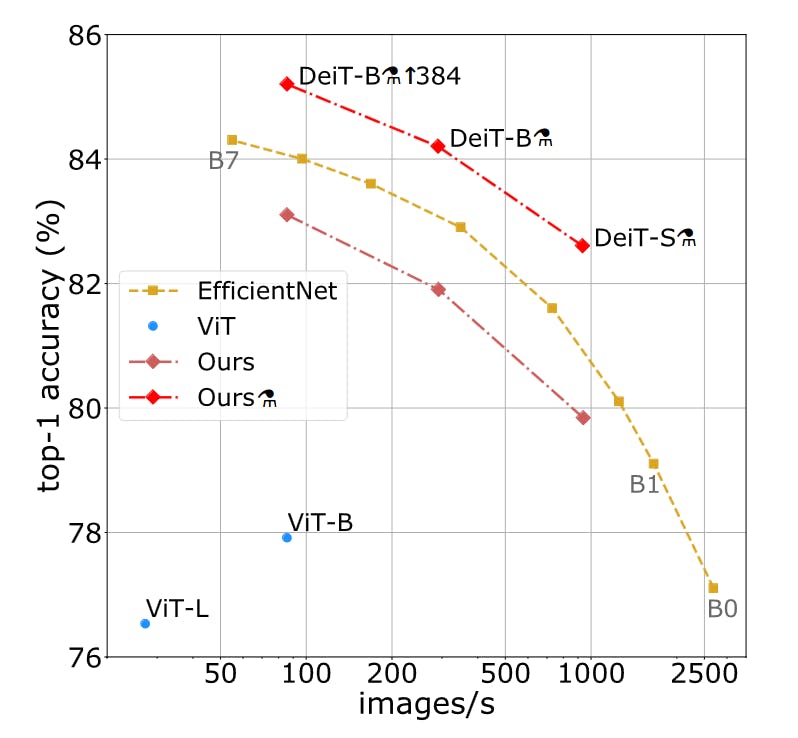

DeiT-B is the base DeiT model, trained without the distillation token. Notably, this straightforward model, trained with well-chosen data augmentation and regularization, already outperforms the Vision Transformer (ViT). Adding the distillation token gives the distilled variant, DeiT-B⚗, which improves performance further. These results highlight the importance of both data augmentation and the distillation token when training Transformers for vision tasks.

Key Takeaway

Data augmentation plays an important role in training Transformers on vision tasks.

Performance can be improved further by appending a distillation token [DIS] to the input sequence and training it to predict the labels produced by a stronger teacher classifier.

Reference

Touvron, H., Cord, M., Douze, M., Massa, F., Sablayrolles, A., & Jégou, H. (2021, July). Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning (pp. 10347-10357). PMLR.