DiT: Unlocking Document Understanding with Unsupervised Pretraining

Alternative Pretraining Models Beyond VGG/ResNet

What’s Document Understanding?

Document understanding involves the analysis and interpretation of various document formats, such as PDFs, Microsoft Word, and PowerPoint. To unify these formats, a common approach is to convert them into images, such as JPEGs, which can then be processed by AI models. In this context, document understanding tasks resemble image understanding, but instead of identifying objects like cats and dogs, the goal is to comprehend text, tables, and figures within the document.

Document AI tasks encompass a range of topics, including document image classification, document layout analysis, table detection, text detection for optical character recognition (OCR), question answering, and more.

Document image classification: This task involves determining the type of document based on an input image.

Document layout analysis: The goal is to understand the structure or layout of a document by identifying and bounding text, headers, tables, and figures.

Table detection: This task is a subset of document layout analysis and focuses on identifying the location of tables within a page.

Text detection: Unlike detecting large blocks of text spanning multiple lines, this task involves highlighting individual lines of text. It is particularly useful for receipt understanding.

DocQA: Given a document, users can ask questions about its content.

Document Image Transformer (DiT)

Many popular pre-trained models, such as ResNet or VGG, are designed to recognize objects like animals, cars, or other general visual categories. They excel at identifying features like legs and eyes in images. However, since these models have not been trained specifically on text, they lack an understanding of textual content. As a result, when we attempt to apply these pre-trained models to document AI tasks, their performance may be limited or sub-optimal.

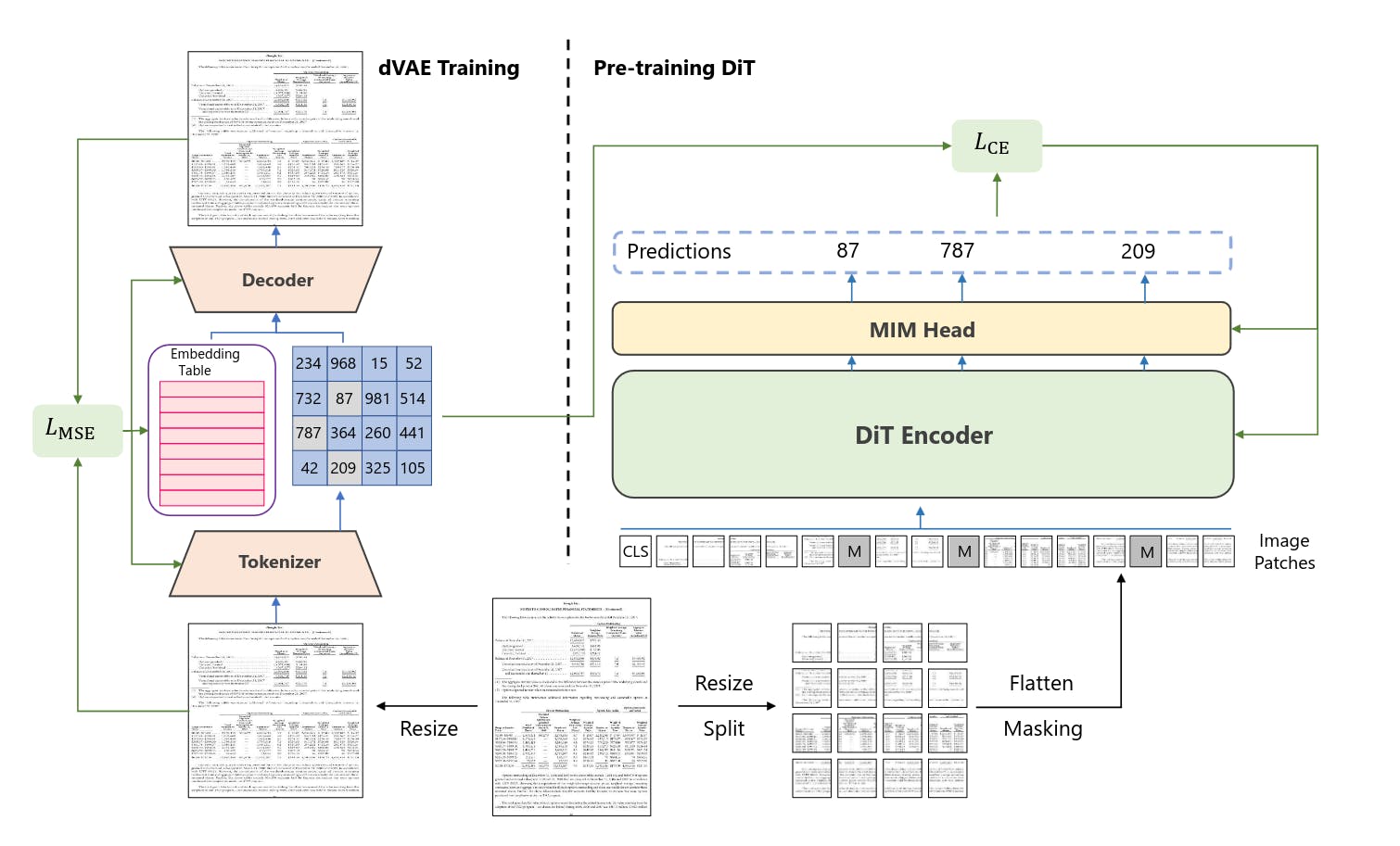

The Document Image Transformer (DiT) is a solution designed to address the limitations of pre-trained models when it comes to understanding texts in document images. To train DiT, millions of document images are used in an unsupervised manner. Typically, when a model learns from images without labels, it encodes the image into a vector and attempts to reconstruct the original image. In the case of DiT, the learning process involves dividing an image into smaller patches, forming an N by N grid. Each patch represents a segment of the image and is assigned a discrete visual token. Think of a visual token as a representation of an image segment. For example, patches that resemble text headings are assigned one label, while those that resemble the edges of figures are assigned another label. Once the image is divided into these patches, they are fed into a Transformer model, which aims to predict the discrete visual token associated with each patch.

To determine the labels for each image patch, we rely on the image tokenizer, which is depicted in the left panel of the provided image. In the realm of text, a tokenizer splits a sentence into smaller units called tokens or subwords, and each token is assigned a number. Similarly, in the context of images, an image is divided into multiple visual tokens, and each token is also represented by a number.

Prior to training the Document Image Transformer (DiT), we employ dVAE (discrete Variational Autoencoder) training to obtain a reliable image tokenizer. The process involves tokenizing an image to generate its visual tokens. These tokens are then fed into a decoder module that aims to reconstruct the original image as accurately as possible. The goal is to produce a reconstruction that closely resembles the original image. By achieving a high-quality reconstruction, we enhance the ability of the visual tokens to effectively represent an image.

Fine-Tuning for Document AI Tasks

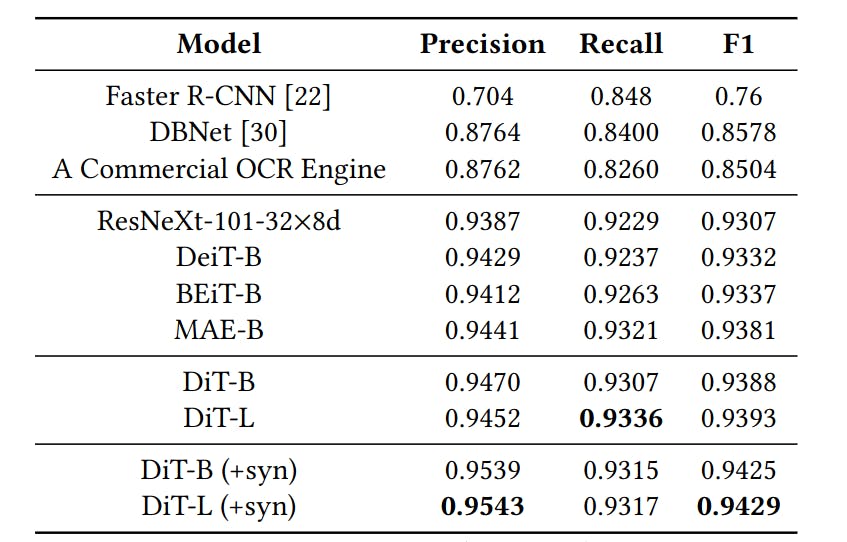

Now that we have the pre-trained model, it’s time we test it on specific tasks. DiT was experimented on document image classification, document layout analysis, table detection, and text detection. The two figures below show the performance when fine-tuned on document layout and text detection. It shows that the pre-trained model DiT outperforms other models. But that does not mean other previous models are bad. Their scores are substantially high with scores of over 90%. DiT simply brings the score even higher.

Performance on document layout analysis using PubLayNet dataset. C stands for Cascade.

Performance on text detection using FUNSD dataset.

Keep in mind that DiT by itself can not be used directly for each task. Instead, it is fine-tuned specifically to each task with extra modules and architecture. The idea is to DiT as a pre-trained model to assist in improving performance.

DiT In Practice

Now that we get the concept. Let’s look at how we can use it. Instead of coding from scratch, we can use the Detectron2 framework or HuggingFace to quickly set it up.

HuggingFace has documentation on how to get started with the DiT model. This would select only the DiT encoder so that you can get an embedding for each image patch. Note that the MIM header, for recognizing the visual token, is not included.

from transformers import AutoModel

model = AutoModel.from_pretrained("microsoft/dit-base")

The code provided earlier primarily focuses on the pre-trained weights of the model. However, if we want to specifically tackle document layout analysis, we need to incorporate additional modules and perform fine-tuning. Fortunately, the author has shared the weights for this task. And there is also a HuggingFace space associated with it. The following snippets are excerpts taken from that space.

# Setup

# DiT extends ViT configuration. So, we fetch that from unilm

import os

import sys

os.system('pip install detectron2')

os.system("git clone <https://github.com/microsoft/unilm.git>")

sys.path.append("unilm")

# import libraries

from unilm.dit.object_detection.ditod import add_vit_config

import detectron2

# Setup detectron config

cfg = detectron2.config.get_cfg()

add_vit_config(cfg)

cfg.merge_from_file("cascade_dit_base.yml")

cfg.MODEL.WEIGHTS = "<https://layoutlm.blob.core.windows.net/dit/dit-fts/publaynet_dit-b_cascade.pth>"

# Load the model

predictor = DefaultPredictor(cfg)

output = predictor(target_image)["instances"]

Note that:

cascade_dit_base.ymlcan be found in this HF space.The code above is less buggy when used with Python ≤ 3.9. Python with a higher version can have problems when importing

add_vit_config.

Key Takeaway

Document Image Transformer (DiT) is a pre-trained model specifically designed for document understanding tasks. It can improve in various areas such as document classification, layout analysis, table detection, and text detection.

DiT is trained in an unsupervised manner using a two-step process. First, an image tokenizer is trained to learn a set of visual tokens for each image patch. Then, the DiT model is trained by masking each image patch and predicting its corresponding visual tokens. This approach enables the model to gain an understanding of the content and structure of document images.

DiT can be implemented using frameworks such as Detectron2 or HuggingFace, allowing developers and researchers to easily utilize and integrate the model into their document understanding workflows.

Conclusion

In conclusion, Document Image Transformer (DiT) is a promising pre-trained model for document understanding tasks. It is trained in an unsupervised manner and has shown impressive results for document classification, layout analysis, table detection, and text detection. DiT can be run using frameworks like Detectron2 or HuggingFace, making it easily accessible for developers and researchers. With its potential for improving document understanding, DiT is definitely a model to keep an eye on in the future.

Reference

Li, J., Xu, Y., Lv, T., Cui, L., Zhang, C., & Wei, F. (2022). DiT: Self-supervised Pre-training for Document Image Transformer. Proceedings of the 30th ACM International Conference on Multimedia. (https://arxiv.org/abs/2203.02378)

https://huggingface.co/spaces/nielsr/dit-document-layout-analysis