Toward Better Sentence Embedding with BERT

Using a Transformer-based model to get vector representation for a sentence

Problem

When it comes to comparing two sentences, the original BERT model suggests concatenating two sentences together separated by [SEP] token, and feeding that long sentence into the model to get the score. But as you may have guessed, that’s not practical. Suppose you have 1000 sentences and you want to find a pair that are mostly similar to each other. That would be 0.5M comparisons to cover all combinations. And the BERT model would need to handle all those comparisons. That is not practical considering the model itself is huge and requires heavy computation for each comparison.

You may have thought, why don’t we just take the embedding of [CLS] of the sentence and use that to represent the sentence? We can even average all the tokens in Transformer and use that as the sentence embedding. Technically, it's possible, but these techniques (straight out of the box) are proven not to be effective in generating proper vectors for sentence representation.

Solution

That’s when this Sentence-BERT comes in. The goal is to fine-tune Transformer-based models, e.g., BERT to produce a better embedding for a sentence. They propose two approaches to do this.

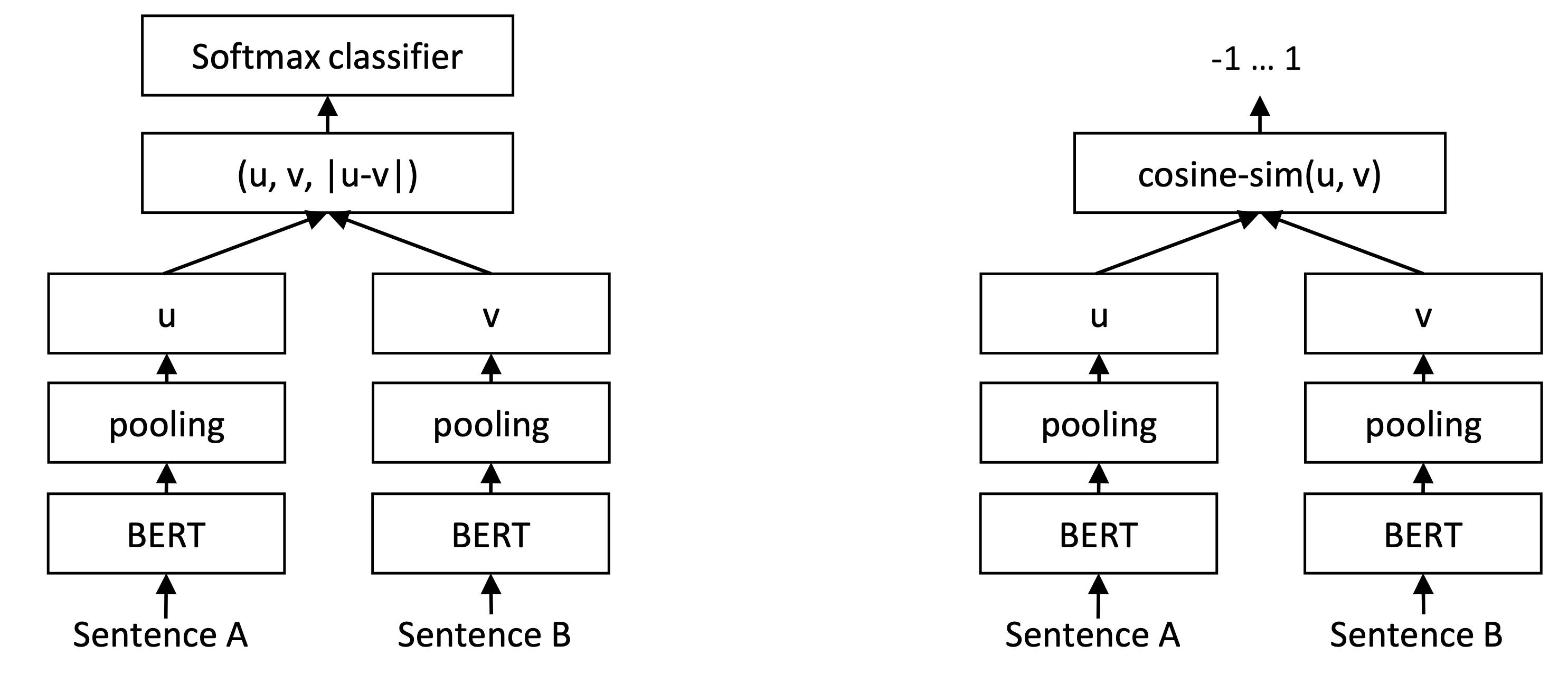

Left: Siamese Network. Right: Cosine-similarity based network

Siamese Network

Take the BERT model to get the embedding for each sentence via mean pooling. But of course, it ain't stop there. They use that embedding and concatenate with another sentence’s embedding (along with some other processing concatenation), and feed that to a dense layer to run the prediction.

Depending on some datasets, a triple loss objective function is also used. So, the idea is instead of classifying whether two sentences are related, there is a third sentence that should be more similar to one sentence than the other, and the model is trained to recognize which one is more related. When such data is not available, classic classification (sigmoid or softmax) is used.

Note that these concatenations of multiple sentences are only for training. During inference, we are only interested in the embedding produced by the BERT model for one sentence.

Cosine-Similarity-based Network.

Another approach is again to run a sentence through the BERT model and takes its embedding by average pooling. But after that, it is used to compare with another sentence using a cosine similarity score.

Results

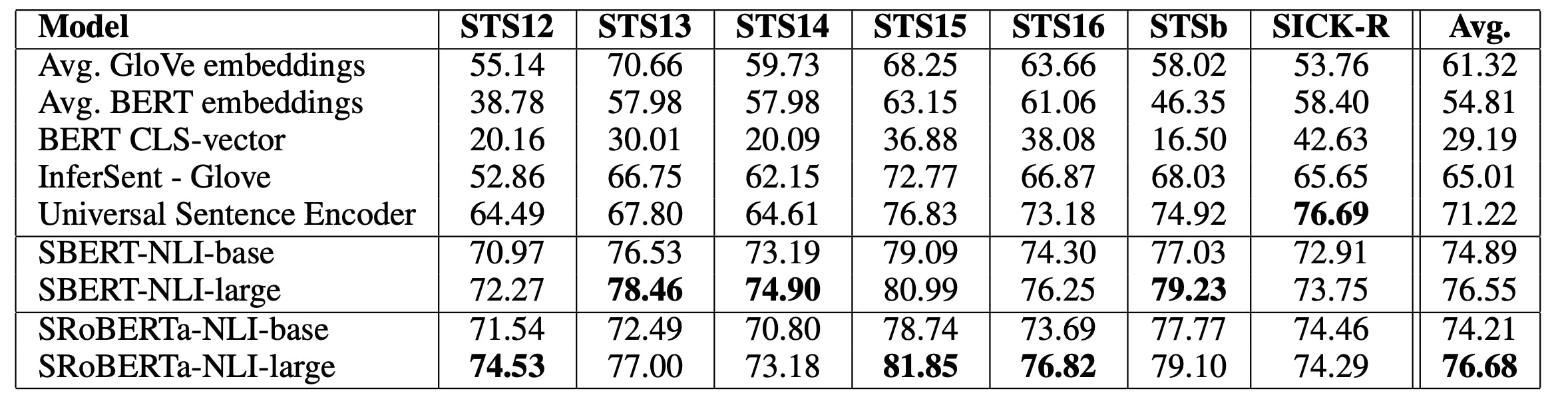

Here are some results of the proposed approaches. The higher the score, the better the model can represent a sentence. As the figure suggests, [CLS] token is even worst than just averaging GloVe embedding (pretrained embedding for each word). The SBERT approach shows a very promising direction in generating a well-represented vector for a sentence.

SBERT is Sentence BERT. It’s trained using the Siamese approach.

Current State of Sentence-BERT

After the introduction to Sentence-BERT, there has been follow-up work on this. Pretty much, they follow the same training procedure while changing with different Transformer models. For example, they would use the RoBERTa instead of the BERT model, as well as DistillBERT, TinyBERT, and so on. So, now we have this array of sentence embedder to choose from.

Please check out this official homepage for the current progress and availability of Sentence-Transformer.

Conclusion

Sentence-BERT aims to produce better sentence embedding than using [CLS] token embedding or out-of-the-box mean pooling. They introduce two methods — Siam modeling and cosine similarity modeling — to construct these models. Right after the introduction of SentenceBERT, there have been many follow-ups working using other variants of Transformer that have other characteristics, like getting similar performance while being more efficient.

References

- Reimers, Nils, and Iryna Gurevych. "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks." Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). 2019.